ZUBAIR IRSHAD

Research Scientist

ABOUT ME!

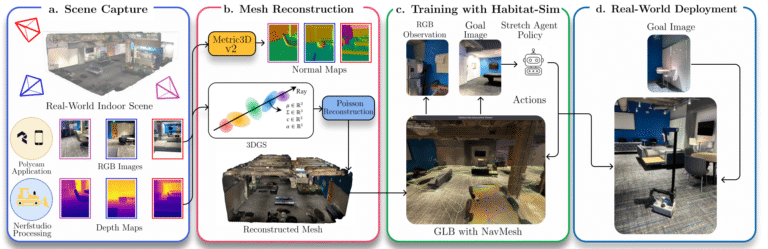

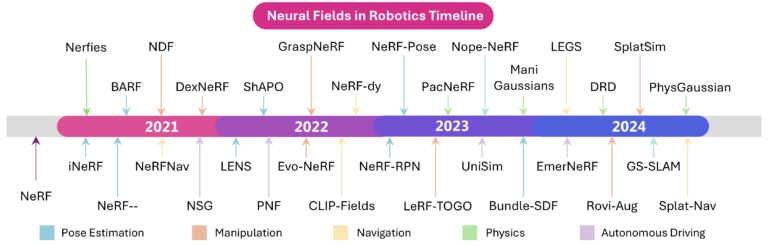

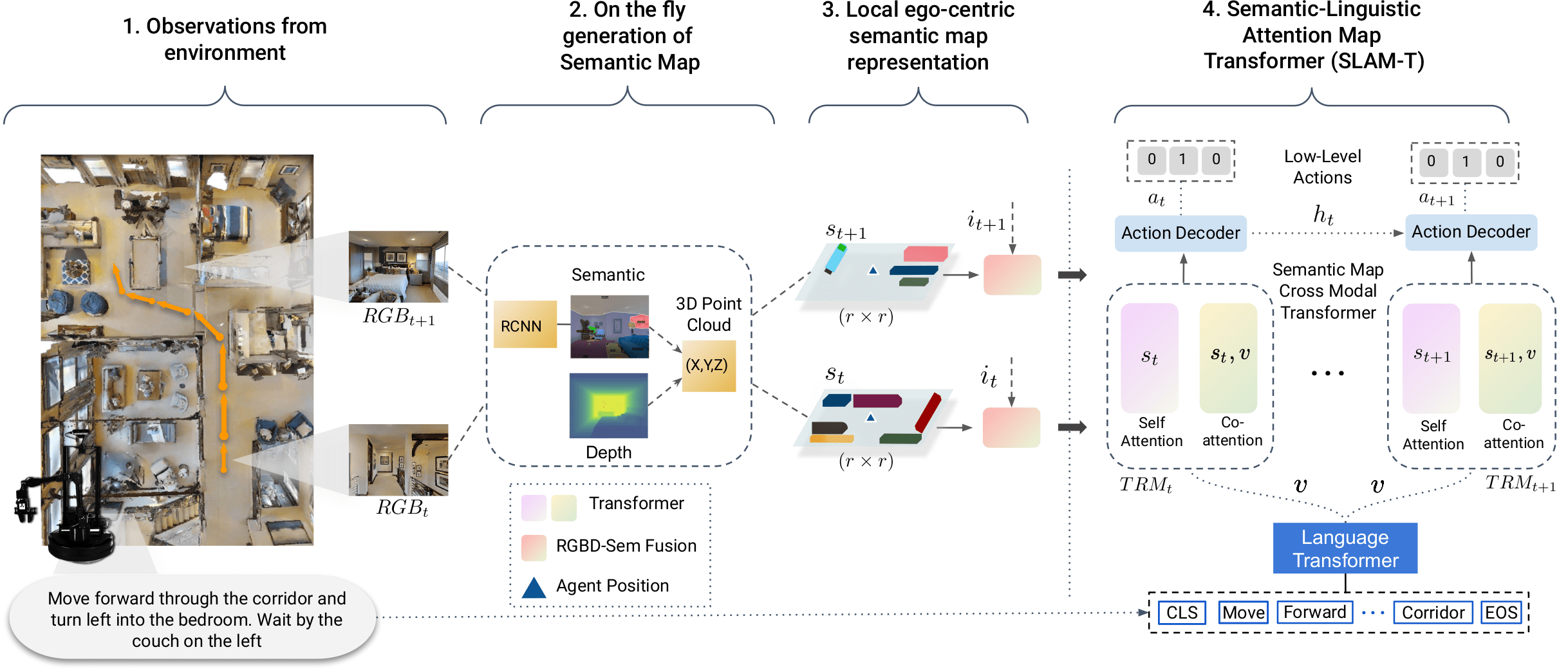

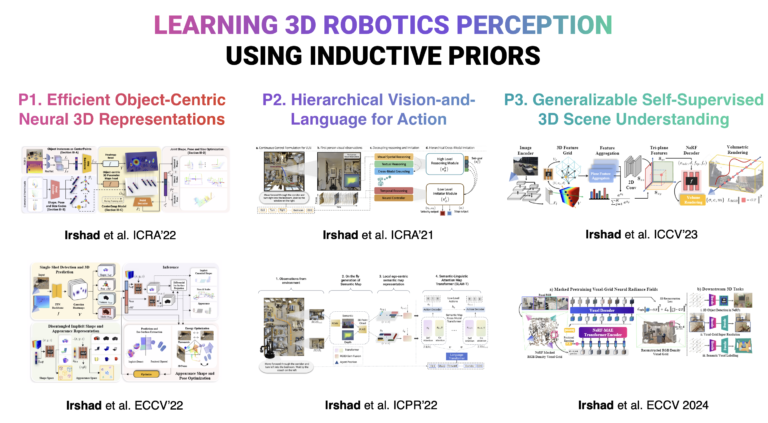

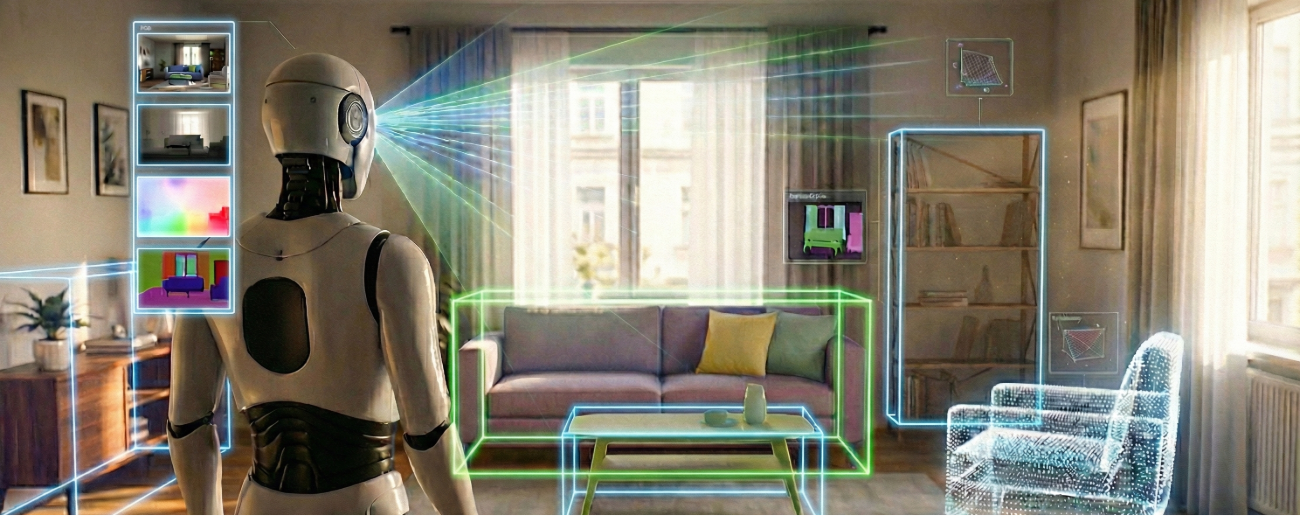

I am a Research Scientist at Toyota Research Institute, working on Large Behavior Models and deep-learning-based 3D perception systems for robotics. More recently, I have been a core contributor to the LBM 1.0 multi-task policy learning and post-training efforts. I received my PhD from the George W. Woodruff School of Mechanical Engineering at the Georgia Institute of Technology, where I was advised by Dr. Zsolt Kira in the Robotics, Perception and Learning (RIPL) Lab. My PhD thesis is titled *Learning 3D Robotics Perception using Inductive Priors*. The thesis is available here, and the dissertation defense video is available here. My current research covers the following topics:

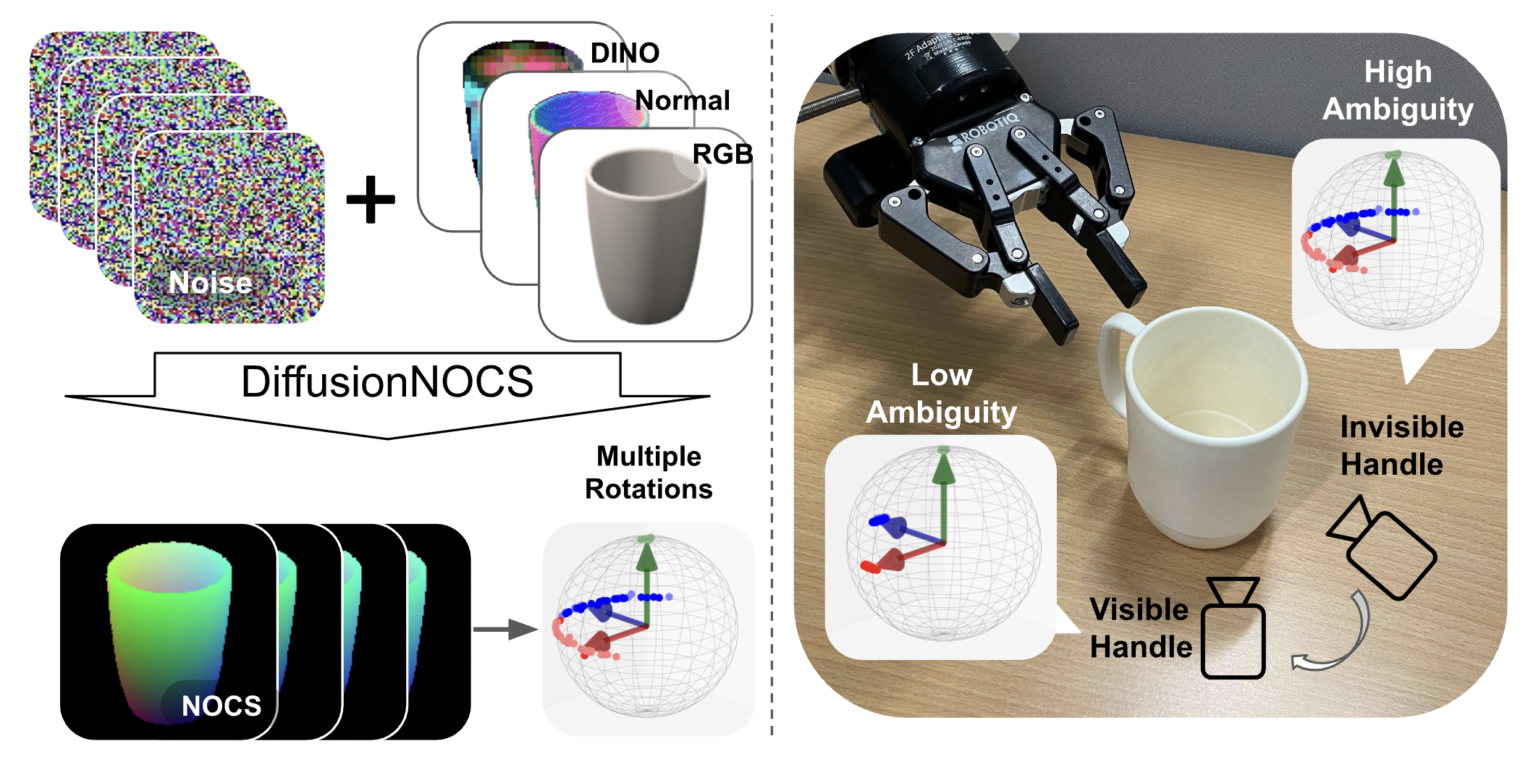

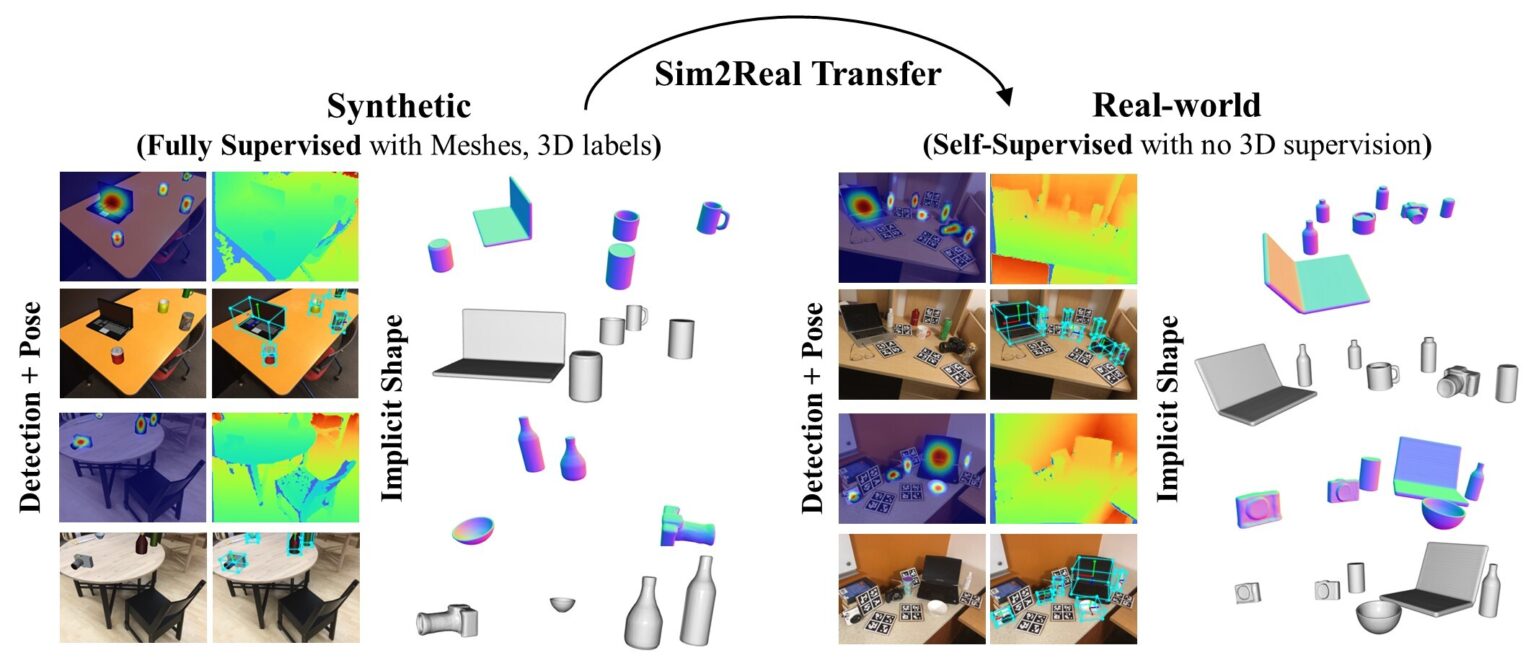

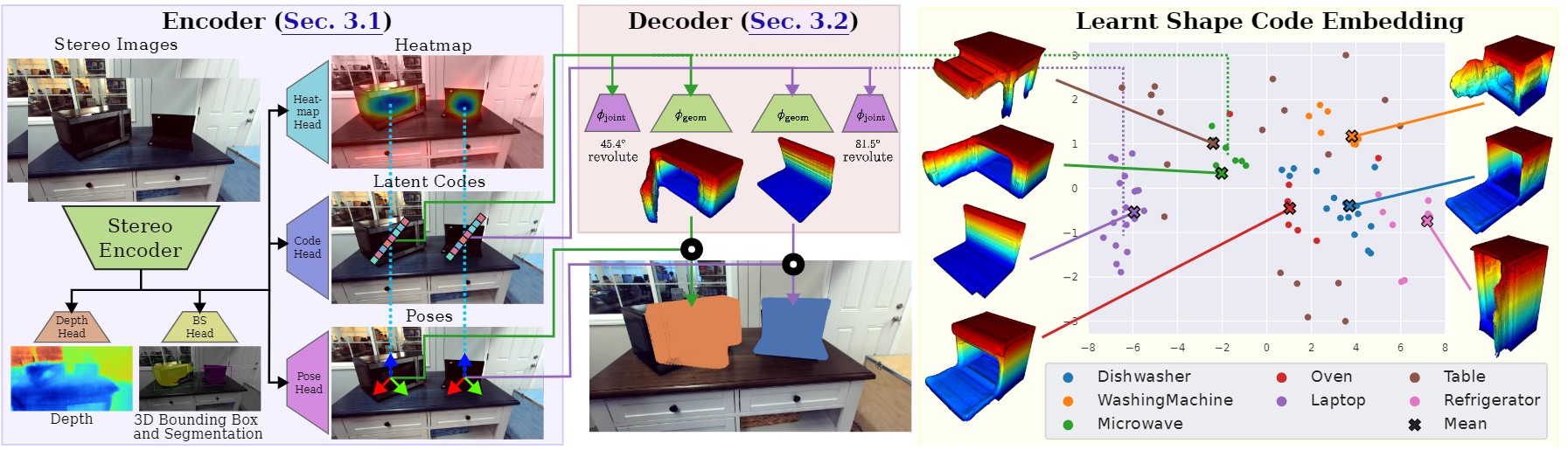

- 3D Perception: 3D Reconstruction, NeRF, Gaussian Splatting, Representation Learning, 6D pose estimation

- Generative AI: Diffusion Models, Generative Action Models, Data Augmentation and Data Generation for Robotics

- Multimodal AI: Vision-and-Language, Embodied AI, Semantic Understanding, Spatio-temporal Learning

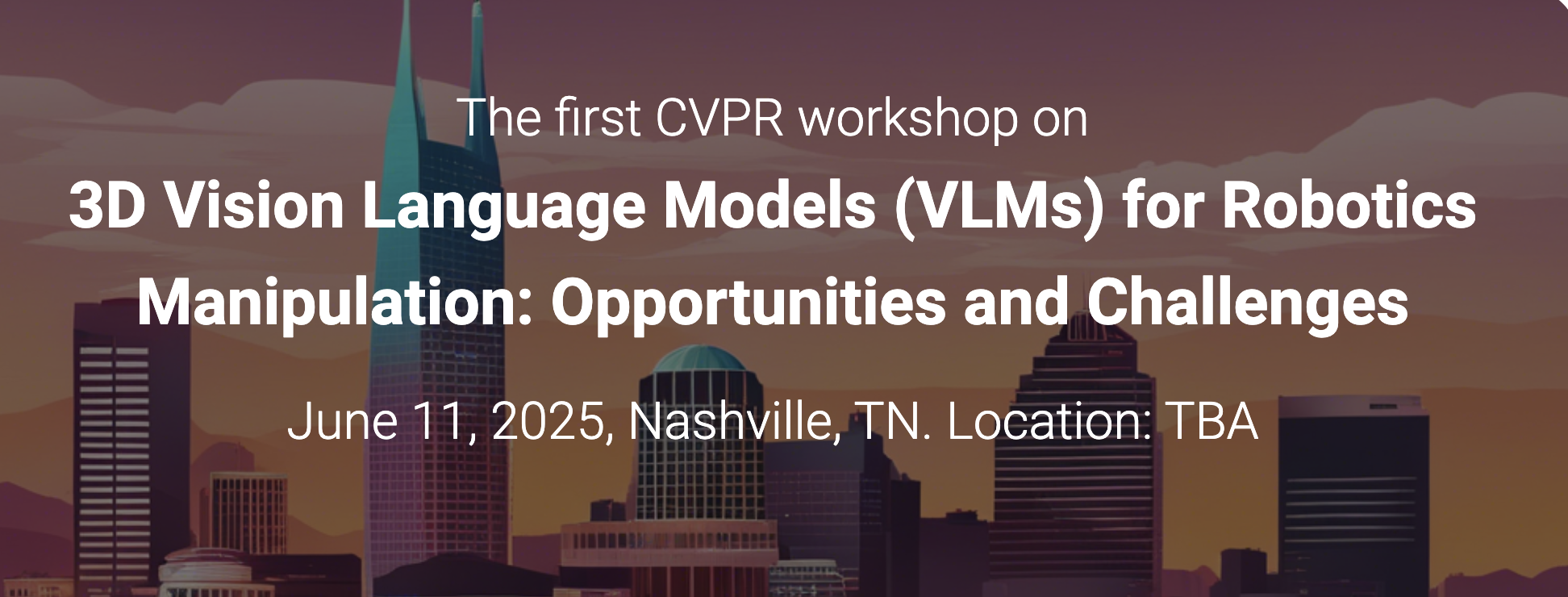

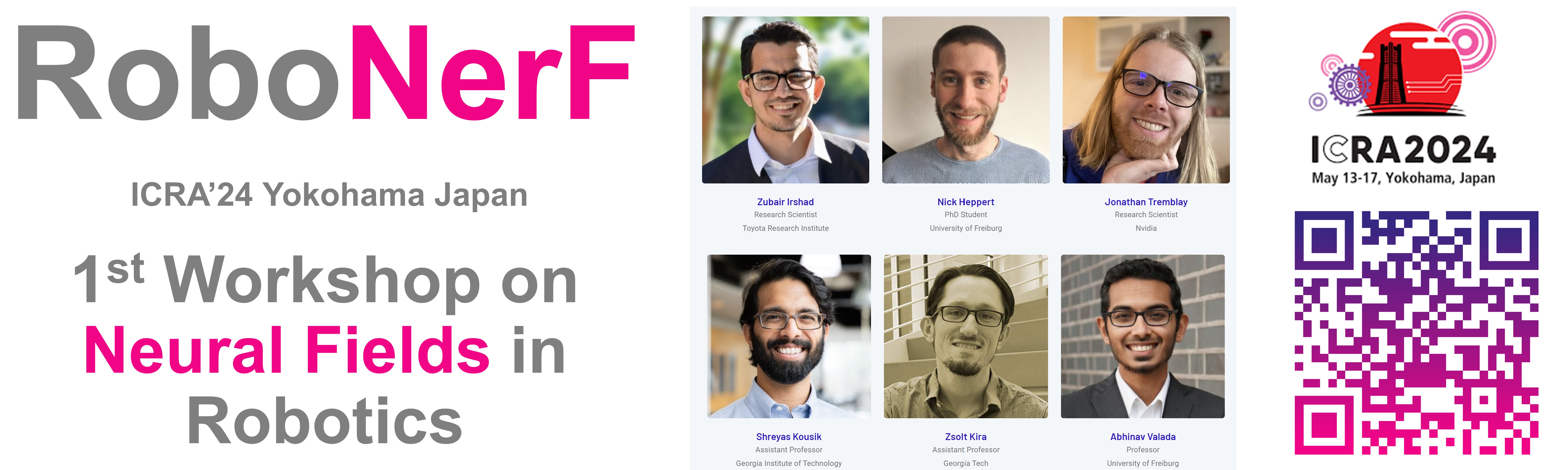

I'm also a technical reviewer for machine learning and robotics conferences and journals, including CVPR, ECCV, ICCV, ICLR, NeurIPS, ICRA, IROS, RSS, and RA-L, and the lead co-organizer of the RoboNeRF workshop at ICRA'24 and the Robo 3D-VLM workshop at CVPR'25! Below you will find my project portfolio. You can find my updated resume here.