Project Description

This project is a ROS-based mobile robot navigator that finds its way by recognizing road signs with image classification. It has two major components:

- Sign recognition based on image classification with an SVM classifier:

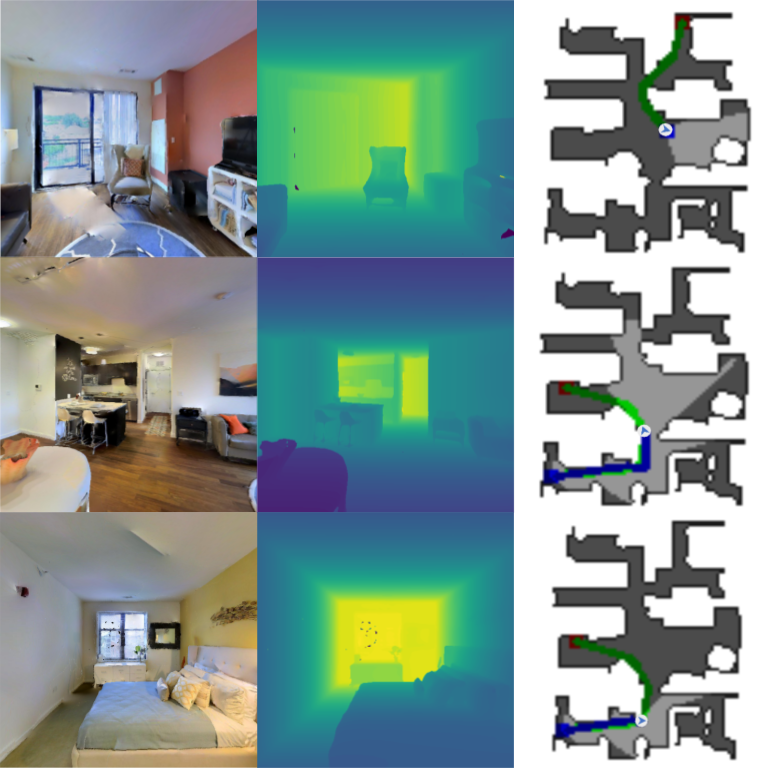

A set of 300 images was used offline to train a classifier for 5 different road signs (turn right, turn left, stop, turn around, and goal). The SVM classifier achieved an accuracy of over 90% on an unseen, diverse set of images taken in the real world. The sign recognition module transforms each image, extracts relevant features with the Canny edge detector, trains the SVM classifier on those features, and stores the trained classifier for online use.
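The offline train, store, and online predict split can be sketched in plain Python. This is a minimal illustration, not the project's code: it swaps in a from-scratch linear SVM trained with the Pegasos subgradient method, combines one binary SVM per sign (one-vs-rest), and uses made-up toy feature vectors in place of the real Canny-edge features. The label order in `SIGNS`, the hyperparameters, and the JSON storage format are all assumptions.

```python
import json
import os
import random
import tempfile
from typing import List, Sequence

# Hypothetical label order for the five signs named above.
SIGNS = ["turn_right", "turn_left", "stop", "turn_around", "goal"]


def score(w: Sequence[float], x: Sequence[float]) -> float:
    """Linear score w . x + b, where the last weight w[-1] is the bias b."""
    return sum(wj * xj for wj, xj in zip(w, x)) + w[-1]


def train_binary_svm(X: List[List[float]], y: List[int], lam: float = 0.01,
                     epochs: int = 200, seed: int = 0) -> List[float]:
    """Linear SVM trained with Pegasos (stochastic subgradient descent on the
    L2-regularized hinge loss). y[i] must be +1 or -1. For simplicity the
    bias is regularized together with the other weights."""
    rng = random.Random(seed)
    w = [0.0] * (len(X[0]) + 1)
    order = list(range(len(X)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)                      # Pegasos step size
            violates = y[i] * score(w, X[i]) < 1.0    # inside the margin?
            w = [(1.0 - eta * lam) * wj for wj in w]   # regularizer step
            if violates:                               # hinge-loss step
                xi = list(X[i]) + [1.0]
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, xi)]
    return w


def train_one_vs_rest(X, labels, n_classes):
    """One binary SVM per sign: that sign is +1, all other signs are -1."""
    return [train_binary_svm(X, [1 if lab == c else -1 for lab in labels])
            for c in range(n_classes)]


def predict(models, x) -> int:
    """Index of the sign whose SVM produces the highest score."""
    scores = [score(w, x) for w in models]
    return max(range(len(scores)), key=scores.__getitem__)


def save_models(models, path: str) -> None:
    """Offline step: store the trained weights for the online node."""
    with open(path, "w") as f:
        json.dump(models, f)


def load_models(path: str):
    """Online step: reload the stored weights."""
    with open(path) as f:
        return json.load(f)


if __name__ == "__main__":
    # Made-up toy features: sign c responds strongly in dimension c.
    X, labels = [], []
    for c in range(len(SIGNS)):
        for k in range(4):
            x = [0.0] * len(SIGNS)
            x[c] = 1.0
            x[(c + 1) % len(SIGNS)] = 0.05 * k
            X.append(x)
            labels.append(c)
    models = train_one_vs_rest(X, labels, len(SIGNS))
    path = os.path.join(tempfile.gettempdir(), "svm_models.json")
    save_models(models, path)
    online = load_models(path)
    print(SIGNS[predict(online, [0.0, 0.0, 1.0, 0.0, 0.0])])
```

In practice a library implementation (for example scikit-learn's `SVC`) would normally replace the hand-written training loop; the sketch only shows how training, storage, and prediction fit together.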

- Navigating to Goal:

This module implements a proportional controller that minimizes the angular and linear errors to the detected sign. A state machine transitions between states based on the classifier's output.
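A minimal sketch of the controller and state machine follows. The gains, speed limits, state names, and sign-to-action mapping are hypothetical; in particular, the section does not say how `stop` is handled, so here it is assumed to halt the robot. A proportional controller scales each error by a gain, and the saturation keeps the commands within the assumed limits.

```python
import math
from enum import Enum, auto


class State(Enum):
    APPROACH_SIGN = auto()  # drive toward the sign closest to the image center
    TURN = auto()           # rotate in place as the recognized sign instructs
    STOPPED = auto()        # hold still (assumed behavior for stop and goal)


# Hypothetical gains and saturation limits (m/s and rad/s).
K_LIN, K_ANG = 0.5, 1.5
MAX_LIN, MAX_ANG = 0.2, 1.0

# Hypothetical turn angle per sign, in radians (positive = counter-clockwise).
TURN_FOR_SIGN = {
    "turn_left": math.pi / 2,
    "turn_right": -math.pi / 2,
    "turn_around": math.pi,
}


def clamp(value, limit):
    """Limit value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def p_control(linear_error, angular_error):
    """Proportional control: each command is gain * error, then saturated."""
    return clamp(K_LIN * linear_error, MAX_LIN), clamp(K_ANG * angular_error, MAX_ANG)


def next_state(state, sign, at_sign, turn_done):
    """Transition on the classifier output once the robot reaches a sign.

    at_sign: the linear error is within some tolerance (hypothetical check).
    turn_done: the in-place turn has reached its target heading.
    """
    if state is State.APPROACH_SIGN and at_sign:
        if sign in ("stop", "goal"):
            return State.STOPPED
        if sign in TURN_FOR_SIGN:
            return State.TURN
    elif state is State.TURN and turn_done:
        return State.APPROACH_SIGN
    return state
```

For example, `next_state(State.APPROACH_SIGN, "turn_left", True, False)` moves the robot into `State.TURN`, and once the turn finishes it returns to approaching the next sign.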

Both components were implemented as ROS nodes:

- Image Node:

Subscribes to image and LIDAR data and publishes the linear and angular errors to the detected object closest to the center of the image. It also publishes the value predicted by the SVM classifier.
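The Image Node's two errors could be computed roughly as follows. This sketch assumes the detector already returns the horizontal pixel centroid of each candidate sign, maps pixels to a bearing with a linear field-of-view approximation, and reads the LIDAR range at that bearing. The image width, field of view, stand-off distance, and scan layout are hypothetical values, not taken from the project.

```python
import math
from typing import Optional, Sequence

# Hypothetical sensor parameters; the real values depend on the robot.
IMAGE_WIDTH = 320                     # pixels
HORIZONTAL_FOV = math.radians(60.0)   # camera horizontal field of view
DESIRED_DISTANCE = 0.5                # metres to stop in front of a sign


def closest_to_center(centroids_x: Sequence[float],
                      width: int = IMAGE_WIDTH) -> Optional[float]:
    """Return the x-centroid nearest the image center, or None if no detections."""
    if not centroids_x:
        return None
    center = width / 2.0
    return min(centroids_x, key=lambda x: abs(x - center))


def angular_error(x_pixel: float, width: int = IMAGE_WIDTH,
                  fov: float = HORIZONTAL_FOV) -> float:
    """Bearing to the object in radians, positive to the left (ROS CCW convention).

    Linear pixel-to-angle approximation; ignores lens distortion."""
    return (width / 2.0 - x_pixel) / width * fov


def linear_error(ranges: Sequence[float], bearing: float,
                 desired: float = DESIRED_DISTANCE) -> float:
    """LIDAR range at the object's bearing minus the desired stand-off distance.

    Assumes the beams evenly cover 360 degrees, index 0 points straight ahead,
    and indices increase counter-clockwise (check angle_min and
    angle_increment of the real sensor_msgs/LaserScan)."""
    n = len(ranges)
    index = int(round(math.degrees(bearing) * n / 360.0)) % n
    return ranges[index] - desired
```

A real node would also need to skip invalid readings (zeros or infinities) before using the range.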

- Go to Goal Node:

Subscribes to odometry data and to the values published by the Image Node, and publishes velocity commands to the robot.
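On the Go to Goal Node side, odometry is what tells the robot when a commanded turn is complete. The sketch below extracts yaw from the orientation quaternion that odometry reports, wraps angle differences, and runs a proportional in-place turn. The gain, limit, and tolerance are hypothetical, and `turn_command` is an illustrative helper, not part of ROS.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Yaw (rotation about z) from a unit quaternion, such as the
    pose.pose.orientation field of a nav_msgs/Odometry message."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def turn_command(start_yaw, current_yaw, target_turn,
                 k_ang=1.5, max_ang=1.0, tol=0.02):
    """P-controlled in-place turn by target_turn radians relative to start_yaw.

    Returns (angular_velocity, done); the gain, limit, and tolerance are
    hypothetical values."""
    error = wrap_angle(start_yaw + target_turn - current_yaw)
    if abs(error) < tol:
        return 0.0, True
    return max(-max_ang, min(max_ang, k_ang * error)), False
```

Wrapping the error keeps the robot turning the short way around even when the yaw reading jumps from +pi to -pi.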

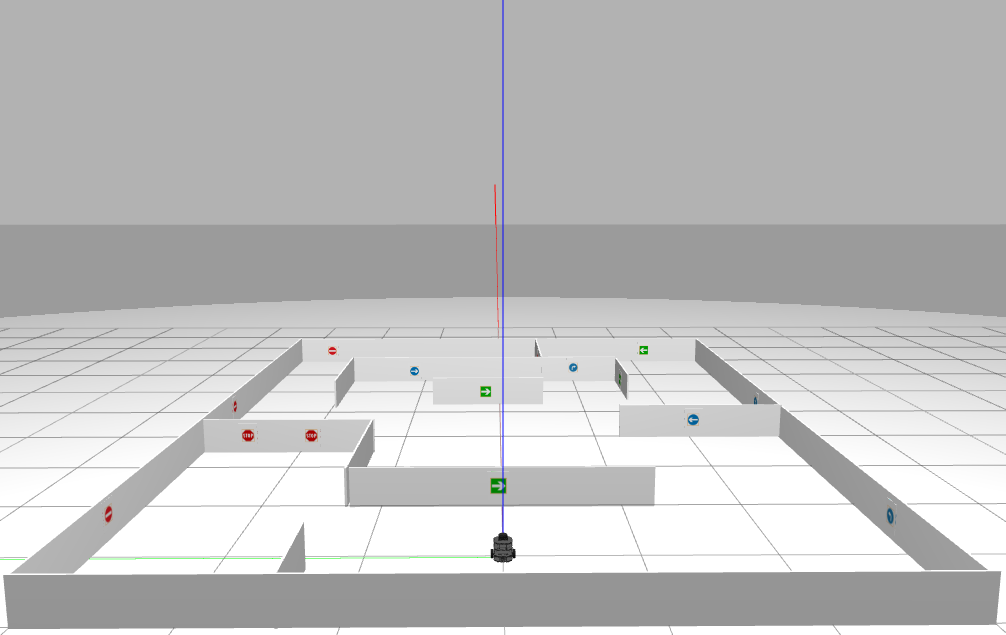

The complete system was tested in simulation in Gazebo (please see the video below). After successful simulation tests, the autonomous system was deployed on a real robot (TurtleBot3) in the robotics research course lab at Georgia Tech, in a maze similar to the simulated one but with the signs in different locations.