Deep Reinforcement Learning-Based Control of Complex Robotic Agents

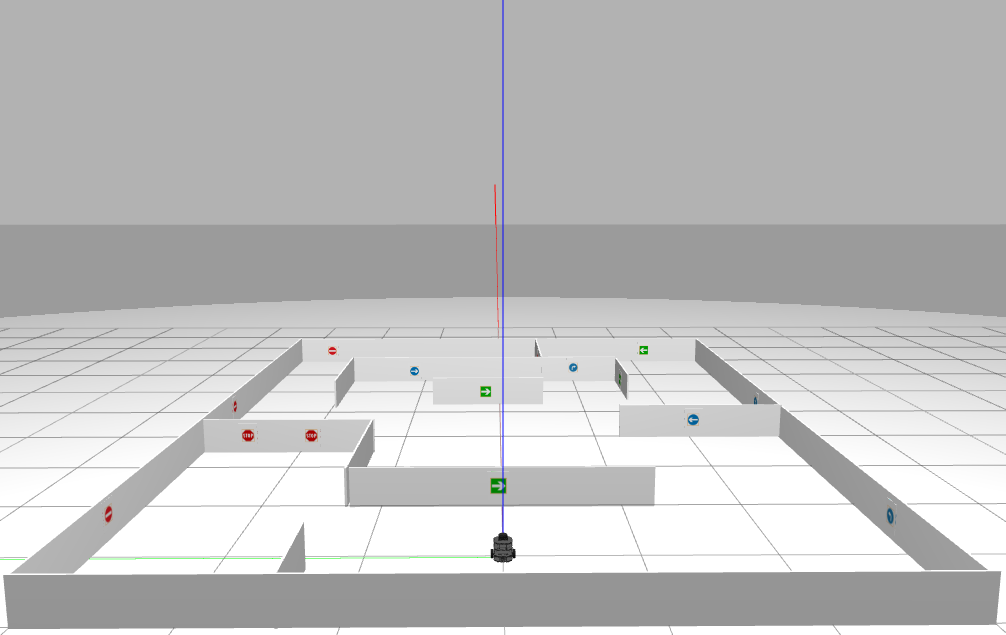

Deep Q Networks for exploration of an autonomous agent: In this project, an agent starts from scratch in a previously unknown UnityML environment and learns to navigate it by collecting as much positive reward (yellow bananas) as possible while avoiding negative reward (blue bananas). A reward of +1 is provided for collecting a yellow banana, and a reward of -1 is provided for collecting a blue banana. The agent previously