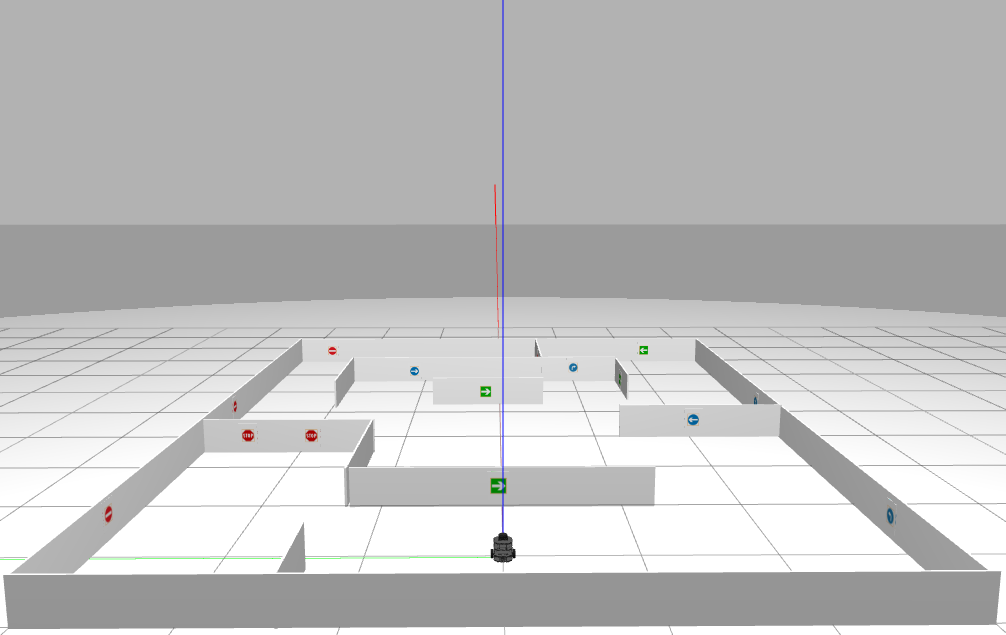

Complex robot maze navigation using image classification and ROS

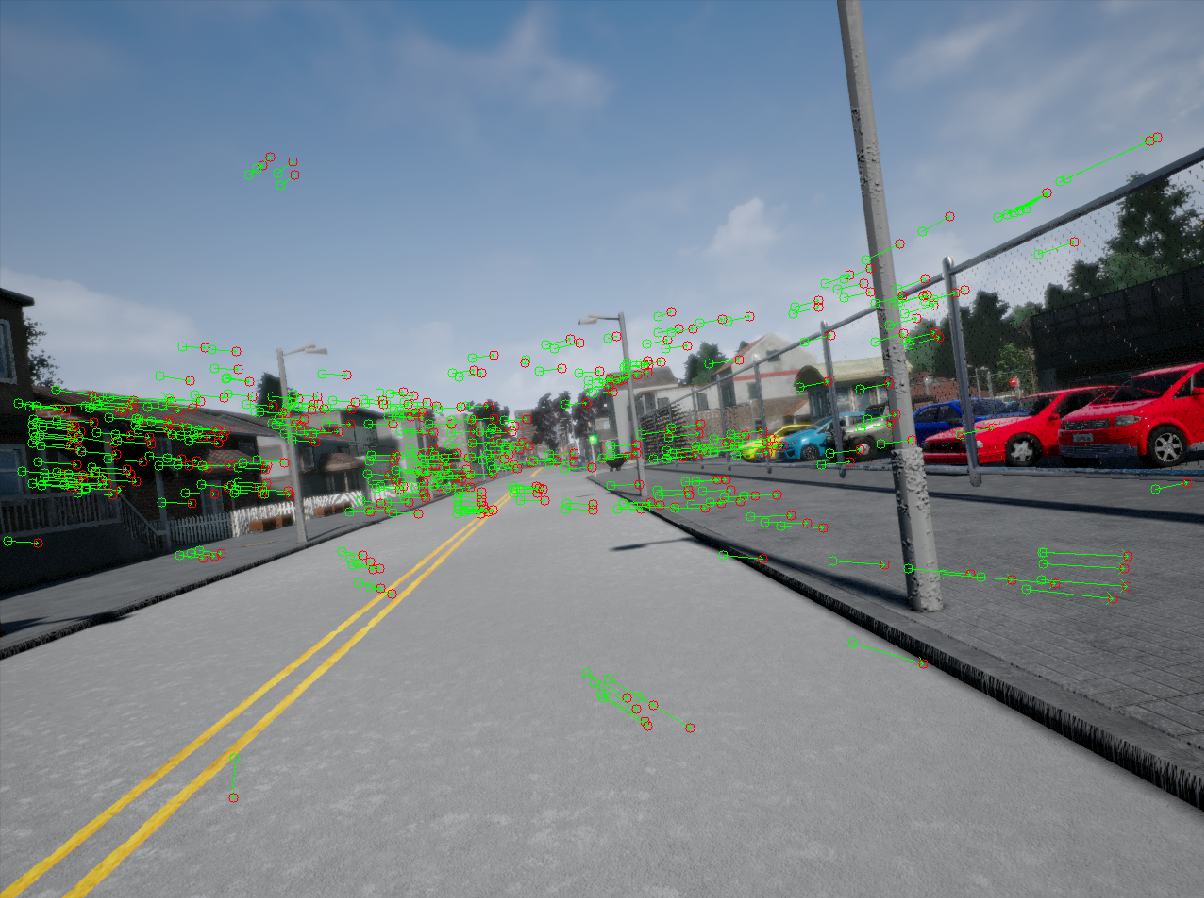

This project is a ROS-based mobile robot navigator that uses sign recognition built on image classification. It has two major components: Image-classification-based sign recognition using an SVM classifier: An SVM classifier was trained offline on a set of 300 images to recognize 5 different road signs (turn right, turn left, stop, turn around, goal). The classifier achieved an accuracy of over 90% on an unseen and diverse set of images taken