Embodied Visual Navigation in Habitat

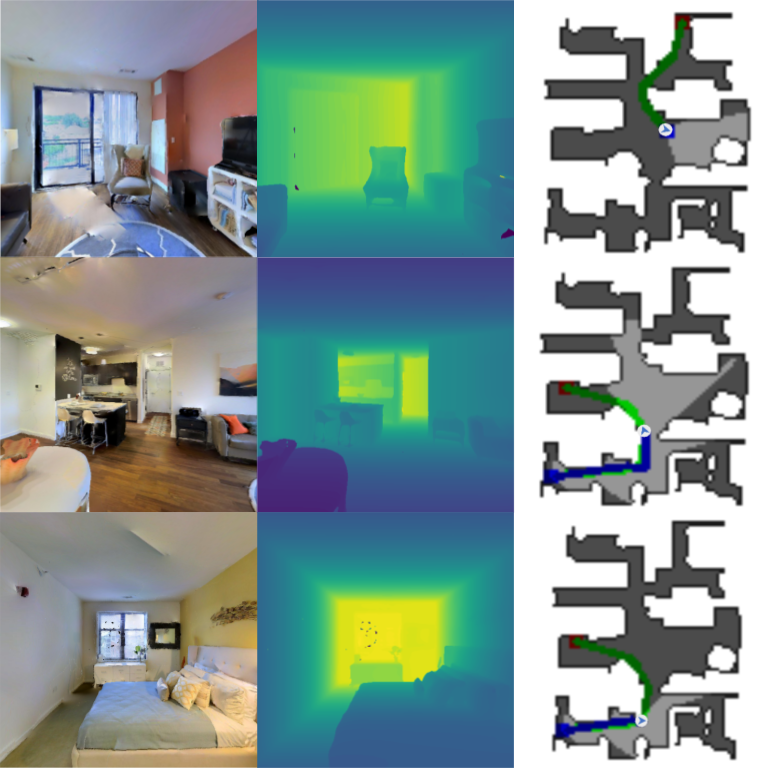

PDF Report: Supervised Learning Baselines for PointGoal Navigation in Photo-realistic indoor cluttered environments

The aim of this work is to solve the embodied PointGoal navigation task in photo-realistic indoor environments using Habitat. In this task, a virtual agent (robot) starts at a random position in an unknown environment and is given the coordinates of a goal location. The primary aim of the agent is to navigate to the goal